Introduction

When Android OS has been ported to MIPS RISC architecture it was noticeable that multimedia applications were not running as smooth as Android OS. Most of multimedia app originated from PC world and were ported to ARM RISC architecture in order to run smoothly on Android OS. Therefore, we were engaged by MIPS technologies (and later by Imagination Technologies) to perform optimization of many multimedia libraries. Since there is a huge amount of multimedia libraries that required optimization, our cooperation on these projects lasted from 2010/2011 to 2018 (some code drops to open-source community have continued since).

The optimization process

Algorithm Analysis

Firstly, we are performing partition of algorithm implementation and performance estimation in C. This step can help to understand the program structure and to find optimization goals in higher-level language. In this step, we examine the underlying data types that the program operates. How many bits are there in a single data element? Can multiple data elements be packed into a 32-bit register for SIMD data types?

Profiling

Performance analysis, better known as profiling, is an examination of program behaviour using information gathered during program execution itself. This analysis identifies parts of the program that are suitable for optimization both of execution speed and memory usage. It is necessary to know, which parts of the software solution are most often executed, because there is no point to do optimization of routines that are rarely executed. Performance analysis shows the number of processor cycles required for each function, thus calculating its occupancy.

Compiler Generated Code Analysis

A new compiler that enables code translation for that architecture accompanies the new architecture on the market. Initially, first compiler versions were not perfect in the sense that it failed to produce the most optimal code, so assistance by programmers and hand-coding assembler writing are required in some parts of the routine. Over time, the compiler evolves and improves, and with each successive version, the code that translates is better and more optimized.

Inline Assembly

Using the inline assembly can reduce the number of instructions required to be executed by the processor. Also, it is important because of its ability to operate and make its output visible on C/C++ variables. With inline assembly, it is possible to do partial optimization for speed-critical sections of code.

Before writing the inline assembler, it is necessary to analyse all original routines that will be optimized. It is generally necessary to make modifications to the program structure, data structure, as well as to do loop unrolling in order to prepare the original code for vectorization and optimizations. For example, we had to carry out in parallel on operands packed into a single 32-bit general-purpose register for SIMD type operations that could be 2×16-bit or 4×8-bit operations.

Tips & Tricks for optimization for MIPS 74k Architecture

In order to do better optimizations of routines, a few specific and important tips and rules need to be followed:

Instruction’s latencies

It is necessary to know the basic latencies of instructions as executed by the 74K core, i.e. how many cycles later can be issued as a dependent instruction. For these purposes, there are four classes of instructions

- Simple ALU instructions – run in 1 cycle. This includes bitwise logical instructions, mov (an alias for addu with $0), shifts up to 8 positions down or up, test-and-set instructions, and sign-extend instructions;

- Simple DSP ASE operations – 2 cycle latency. The same as most regular MIPS32 arithmetic without multiply and saturation;

- DSP instructions, which feature saturation or rounding – three-cycle latency;

- Special DSP multiply – 6–9 cycle latency.

Out-of-order execution

The 74K core supports out-of-order instruction execution, which can automatically reorder instructions to more efficiently pair up instructions for parallel execution and help hide instruction and cache latencies.

Loads and load-to-use delay

MIPS CPUs cannot deliver loaded data to the immediately following instruction without a delay. Typically, data is delivered one clock later, so we can try to put some useful and non-dependent instruction between the load and its first use.

Branch delay slot instruction

The MIPS architecture defines that the instruction following a branch (the “branch delay slot” instruction) is always executed. Also, there are special forms of branches called “branch likely”, which execute the branch delay slot instruction only when the branch is taken.

SIMD

Algorithms are usually described and implemented as a step-by-step processing over scalar data. Most of contemporary general purpose processors have a good support for processing multiple data with single instruction (SIMD). Thus, instead of operating on data of scalar type, an algorithm needs to be converted to process data in vector form. The immediate benefit of processing data in vector form is reduction of instructions required to process data and reduction of memory accesses as data can also be accessed as vectors. The process of converting an algorithm to work on vector data types is not straight forward. A special care should be taken on dependency of data used in consecutives processing steps.

There are four different types of dependency that should be taken care for:

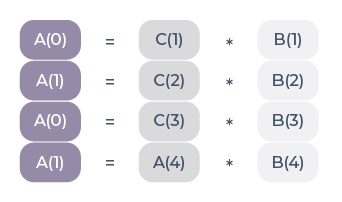

Read after write – a variable get a new value that will be used in the one of next instructions. An example is given by the sequence in the next image. Each processing step requires a result of previous processing step.

Write after read – a variable value is used before modification. This case is safe for vectorization.

Read after read – a variable is read several times in consequence without change of its value. This case is safe for vectorization.

Write after write – represents data dependencies in which a variable value is changed by one instruction and there is another instruction in a sequence that modifies the variable.

Examples of optimized libraries

- Ffmpeg – MP3, AAC, AC3, AC3+

- H.264 decoder

- AMR NB/WB

- Tremolo library

- SILK codec

- Dolby Intrinsics

- SKIA

- Pixman

- Libpng

- libjpeg-turbo

- VP6

- Adobe Flash Player

- VP8

- WebP

- WebAudio

- WebRTC

- BoringSSL

- OpenVx

- OpenMax FFT

Conclusion

Cooperation with Google and the open source community have thought us how to work on big, long lasting and geographically distributed projects.

Versatility and longevity of work on these topics has upskilled the RT-RK engineering power house becoming a backbone of our system software development know-how.